I recently had the good fortune to take on a really fun project at work. First off, the client was incredibly easy to work with, which makes any project (even something I might consider tedious and boring, like migration work) a win in my book. In any case, this wasn’t a boring project: the client asked us to roll out Cloud Custodian across their entire AWS footprint, which at this point consists of an AWS Organization with a decent number of accounts (and more to follow).

While I had never used it before, I was aware of c7n-org (part of the broader Cloud Custodian suite) and its design goal: letting a user run custodian across multiple accounts. Strictly speaking, c7n-org doesn’t require AWS Organizations, but using Organizations has benefits, such as having c7n-org generate the YAML file it uses to map out which accounts to run against, or using StackSets to orchestrate the IAM roles in child (spoke) accounts that custodian runs under.
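For reference, c7n-org reads the accounts it should target from a YAML config file with a top-level accounts list, where each entry gives an account_id, a name, the regions to cover, and the role ARN to assume. c7n-org can generate this file from Organizations for you; the sketch below is only an illustrative hand-rolled equivalent, not that generator. It assumes boto3 credentials in the Organizations management account, and the role name (CloudCustodian, standing in for whatever role your StackSet provisions in each child account) and the helper names are hypothetical.

```python
from typing import Iterable, List


def build_accounts_config(org_accounts: Iterable[dict], role_name: str,
                          regions: List[str]) -> dict:
    """Turn Organizations account records into a c7n-org style accounts config."""
    accounts = []
    for acct in org_accounts:
        # Skip suspended or pending-closure accounts; there is nothing to assume into.
        if acct.get("Status") != "ACTIVE":
            continue
        accounts.append({
            "account_id": acct["Id"],
            "name": acct["Name"],
            "regions": list(regions),
            # Hypothetical role name: the role a StackSet created in each child account.
            "role": "arn:aws:iam::{}:role/{}".format(acct["Id"], role_name),
        })
    return {"accounts": accounts}


def fetch_org_accounts() -> List[dict]:
    """List every account in the Organization (needs management-account credentials)."""
    import boto3  # imported lazily so build_accounts_config works without AWS access

    paginator = boto3.client("organizations").get_paginator("list_accounts")
    return [acct for page in paginator.paginate() for acct in page["Accounts"]]
```

Usage would be along the lines of `yaml.safe_dump(build_accounts_config(fetch_org_accounts(), "CloudCustodian", ["us-east-1"]))` written to accounts.yml. Keeping the transformation separate from the API call makes it easy to test without touching AWS.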

My solution and the deployment mechanisms I designed for it worked fairly well overall. These included StackSets to provision the IAM roles custodian runs under in the child accounts, plus a few stacks in the main “security” account, which houses most of Custodian’s standalone assets: c7n-mailer and its backing resources, and something to run c7n-org along with its backing resources. Outside of AWS, I stitched together a pretty nice policy-authoring tool in Python. It uses Jinja to pull out common config and inject those values into policy templates based on a dev/prod split, and it can aggregate policies spread across multiple files (authored as YAML) into a single document under a unified policies key that c7n-org can ingest. (For reference, c7n-org run accepts only a single policy file as an argument, in contrast to the more standard custodian run, which can be pointed at a directory full of policy files.)
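The aggregation step is what makes multi-file authoring work with c7n-org’s single-file limit. A minimal sketch, not the tool described above, assuming PyYAML and Jinja2 are installed, that each source file is a Jinja-templated YAML document with its own top-level policies list, and that the dev/prod values arrive as a plain dict; the function names and the env variable are hypothetical.

```python
from pathlib import Path
from typing import Dict, List

import yaml                                      # PyYAML
from jinja2 import Environment, StrictUndefined  # Jinja2

# StrictUndefined turns a missing dev/prod value into an error instead of an empty string.
JINJA = Environment(undefined=StrictUndefined)


def render_policy_file(path: Path, env_vars: Dict[str, str]) -> List[dict]:
    """Render one Jinja-templated YAML file and return its list of policies."""
    rendered = JINJA.from_string(path.read_text()).render(**env_vars)
    doc = yaml.safe_load(rendered) or {}
    return doc.get("policies", [])


def aggregate(policy_dir: Path, env_vars: Dict[str, str]) -> dict:
    """Merge every *.yml file in policy_dir into one {'policies': [...]} document."""
    merged: List[dict] = []
    for path in sorted(policy_dir.glob("*.yml")):
        merged.extend(render_policy_file(path, env_vars))
    # Once everything lives in one file, policy names have to stay unique.
    seen = set()
    for policy in merged:
        if policy["name"] in seen:
            raise ValueError("duplicate policy name: " + policy["name"])
        seen.add(policy["name"])
    return {"policies": merged}
```

The result can be written out with `yaml.safe_dump(aggregate(Path("policies"), {"env": "dev"}), sort_keys=False)` and that single file passed to `c7n-org run` via `-u`. Rendering per file before merging keeps each template small, and the duplicate-name check catches collisions that only appear after the merge.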

So, all that was left was to set up something to run c7n-org. As I mentioned, my first inclination was to try it in a Lambda function. The catch is that c7n-org is basically a CLI tool, so running it from Lambda takes some doing. Still, after reading a post in cloud-custodian’s Gitter channel from a gent who said he had pulled off this feat before, I thought I was in good shape. (Maybe he did; it’s possible that the c7n-org changes behind the problems I ran into were added at some later point. The version at the time I hit this issue was 0.5.7.) There were some kinks to work out in actually invoking c7n-org run, but I worked through them, only to then discover this error in my CloudWatch logs:

```
Traceback (most recent call last):
  File "/var/task/c7n-org", line 10, in <module>
    sys.exit(cli())
  File "/var/task/click/core.py", line 829, in __call__
    return self.main(*args, **kwargs)
  File "/var/task/click/core.py", line 782, in main
    rv = self.invoke(ctx)
  File "/var/task/click/core.py", line 1259, in invoke
    return _process_result(sub_ctx.command.invoke(sub_ctx))
  File "/var/task/click/core.py", line 1066, in invoke
    return ctx.invoke(self.callback, **ctx.params)
  File "/var/task/click/core.py", line 610, in invoke
    return callback(*args, **kwargs)
  File "/var/task/c7n_org/cli.py", line 636, in run
    with executor(max_workers=WORKER_COUNT) as w:
  File "/var/lang/lib/python3.7/concurrent/futures/process.py", line 556, in __init__
    pending_work_items=self._pending_work_items)
  File "/var/lang/lib/python3.7/concurrent/futures/process.py", line 165, in __init__
    super().__init__(max_size, ctx=ctx)
  File "/var/lang/lib/python3.7/multiprocessing/queues.py", line 42, in __init__
    self._rlock = ctx.Lock()
  File "/var/lang/lib/python3.7/multiprocessing/context.py", line 67, in Lock
    return Lock(ctx=self.get_context())
  File "/var/lang/lib/python3.7/multiprocessing/synchronize.py", line 162, in __init__
    SemLock.__init__(self, SEMAPHORE, 1, 1, ctx=ctx)
  File "/var/lang/lib/python3.7/multiprocessing/synchronize.py", line 59, in __init__
    unlink_now)
OSError: [Errno 38] Function not implemented
```

The important part in all that? I homed in immediately on OSError: [Errno 38] Function not implemented.
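The bottom of that traceback is multiprocessing creating a SemLock (via ctx.Lock()), so the failure should be reproducible without c7n-org at all. A minimal probe, using a hypothetical helper name, that attempts the same lock creation and reports whether the platform supports it; on Linux, errno 38 is ENOSYS ("Function not implemented").

```python
import errno
import multiprocessing as mp


def semaphores_supported() -> bool:
    """Return True if multiprocessing can create a lock on this platform.

    Hypothetical helper: it exercises the same SemLock creation that fails in
    the traceback (multiprocessing queue -> ctx.Lock() -> SemLock).
    """
    try:
        mp.Lock()
    except OSError as exc:
        # On Linux, errno 38 is ENOSYS: "Function not implemented".
        if exc.errno == errno.ENOSYS:
            return False
        raise
    return True


if __name__ == "__main__":
    print("multiprocessing locks supported:", semaphores_supported())
```

Dropped into a throwaway Lambda, this should return False where a laptop or EC2 instance returns True, which isolates the problem to the runtime rather than c7n-org itself.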

So, what the heck is OS Error 38?

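A quick search for OS Error 38, Python, and Lambda turned up some interesting info. I checked Stack Overflow first, where I found this post. A bit more digging turned up that Python’s multiprocessing module expects /dev/shm to be available: its Queue implementation creates a lock backed by a POSIX semaphore, and Lambda’s execution environment doesn’t provide /dev/shm, so creating that lock fails with Errno 38. That is exactly the path in the traceback above, where concurrent.futures’ ProcessPoolExecutor builds a multiprocessing queue and the queue’s lock creation blows up. There is a quite long-running AWS Support thread on this very issue. Long story short: if you want to use multiprocessing in Lambda, don’t use its Queue implementation or anything else that needs a multiprocessing lock, such as Pool or ProcessPoolExecutor; multiprocessing.Process and Pipe do work. This is all well and fine if you’re the one writing the code. Otherwise, you either maintain your own fork (and deal with the implications of changing the implementation) or find another way to run it.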
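If you do control the code, the usual workaround is to fan work out with multiprocessing.Process and Pipe, neither of which needs /dev/shm, instead of Queue, Pool, or ProcessPoolExecutor. A minimal sketch, assuming a per-account unit of work; worker, fan_out, and the account IDs are hypothetical placeholders, not c7n-org internals. It uses the "fork" start method, which is available on Linux (and therefore in Lambda).

```python
import multiprocessing as mp
from multiprocessing.connection import Connection
from typing import Dict, List


def worker(account_id: str, conn: Connection) -> None:
    """Hypothetical per-account task; real code would run policies here."""
    conn.send({"account": account_id, "status": "ok"})
    conn.close()


def fan_out(account_ids: List[str]) -> List[Dict[str, str]]:
    """Run worker() once per account using Process + Pipe (no Queue, no Pool)."""
    # Lambda runs on Linux, where the 'fork' start method is available.
    ctx = mp.get_context("fork")
    jobs = []
    for account_id in account_ids:
        # duplex=False returns (receive-only end, send-only end).
        recv_conn, send_conn = ctx.Pipe(duplex=False)
        proc = ctx.Process(target=worker, args=(account_id, send_conn))
        proc.start()
        send_conn.close()  # the parent only reads; the child holds its own copy
        jobs.append((proc, recv_conn))

    results = []
    for proc, recv_conn in jobs:
        # Read before join() so a large payload can't leave the child blocked on send.
        results.append(recv_conn.recv())
        recv_conn.close()
        proc.join()
    return results


if __name__ == "__main__":
    print(fan_out(["111111111111", "222222222222"]))
```

This starts one process per item; for a large Organization you would want to cap concurrency, for example by processing accounts in fixed-size batches.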

Docker and AWS Batch to the Rescue

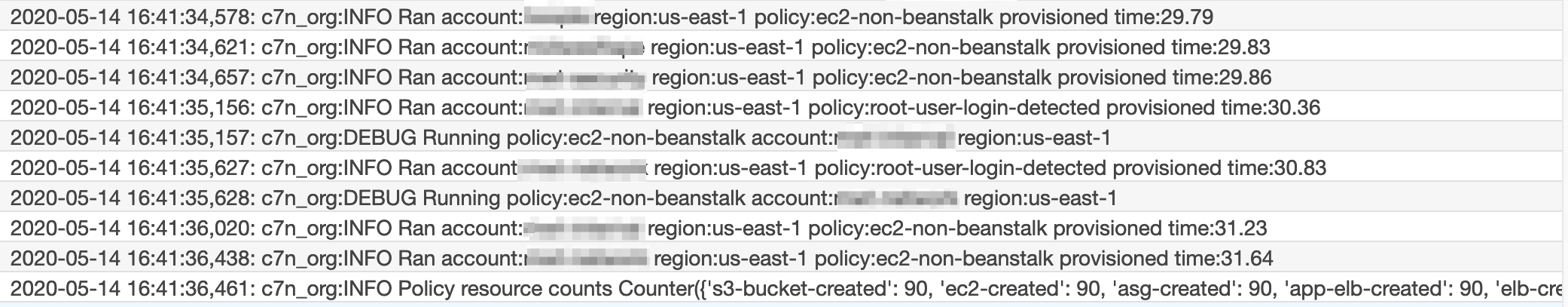

I decided to throw in the towel on the Lambda-based solution and pull out a trick that’s worked for me in the past. Using LambCI’s docker-lambda, I quickly containerized the code I had written for Lambda. Drawing on some previous work I had done with Batch, I was able to get a Batch Compute Environment, Job Queue, and Job Definition set up in relatively short order, so while it’s not Lambda, it’s still “serverless”. There was some additional experimentation to be done (setting parallelization flags, choosing proper instance sizes, etc.), but I can tell you that it is pretty straightforward to run your Lambda-style c7n-org code on Batch, even if you have to shim it into a container! No OS Error 38, just lots of nice log output. Roll that beautiful log footage!

That looks more like it!
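With the Compute Environment, Job Queue, and Job Definition in place, kicking off a run comes down to submitting a job. A minimal sketch using boto3’s Batch submit_job, assuming the queue and job definition already exist; the names c7n-org-queue, c7n-org-job, and the helper functions are hypothetical, and the command override is only an example of a c7n-org run invocation.

```python
from typing import Dict, List


def build_submit_job_request(job_queue: str, job_definition: str,
                             command: List[str]) -> Dict:
    """Assemble keyword arguments for boto3's batch.submit_job()."""
    return {
        "jobName": "c7n-org-run",
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        # Overrides the container's default command for this one job.
        "containerOverrides": {"command": command},
    }


def submit(job_queue: str, job_definition: str, command: List[str]) -> str:
    """Submit the job and return its Batch job ID."""
    import boto3  # imported lazily so the request builder works without AWS access

    batch = boto3.client("batch")
    response = batch.submit_job(
        **build_submit_job_request(job_queue, job_definition, command)
    )
    return response["jobId"]
```

For example, `submit("c7n-org-queue", "c7n-org-job", ["c7n-org", "run", "-c", "accounts.yml", "-u", "policies.yml", "-s", "/tmp/output"])`. Passing the command as an override keeps a single job definition reusable for dev and prod policy files.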

A few more links related to Python, multiprocessing, and OS Error 38: